Back on this.

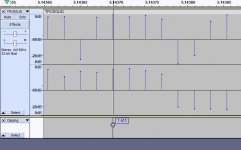

This slice is 2.5 TEN-THOUSANDTHS of a second (0.25 ms). It shows the single data point that is clipping. There are three such data points in the entire sample. If all three were in the same shot sample and you trimmed that sample to 10 ms, how loud would they have to be to alter the result significantly?
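One way to put numbers on that question is a toy energy calculation: take the trimmed 10 ms window, assume the clipped samples were really some number of dB above the rail, and see how much the window's total energy (sum of squared pressure, the kind of quantity an Leq or SEL is built on) changes. This is a minimal sketch under those assumptions. The metric actually being compared in this thread isn't stated here, and `level_change_db`, `excess_needed_db`, the 48 kHz sample rate and the synthetic "shot" are all hypothetical, not measured data.

```python
import numpy as np

def level_change_db(window, clipped_idx, excess_db):
    """Rise in the window's energy level (dB) if the clipped samples had really
    been `excess_db` higher in amplitude than the recorded clip level."""
    x = np.asarray(window, dtype=float)
    y = x.copy()
    y[clipped_idx] *= 10 ** (excess_db / 20)       # scale pressure amplitude of clipped samples
    return float(10 * np.log10(np.sum(y ** 2) / np.sum(x ** 2)))

def excess_needed_db(window, clipped_idx, delta_db):
    """Inverse question: how far above the rail (dB) the clipped samples must
    have been to shift the window's energy level by `delta_db`."""
    x = np.asarray(window, dtype=float)
    e_all = np.sum(x ** 2)
    e_clip = np.sum(x[clipped_idx] ** 2)           # energy carried by the clipped samples
    g2 = (e_all * 10 ** (delta_db / 10) - (e_all - e_clip)) / e_clip
    if g2 <= 0:
        raise ValueError("requested change is not reachable by scaling the clipped samples")
    return float(10 * np.log10(g2))

if __name__ == "__main__":
    fs = 48_000                                    # assumed sample rate
    n = int(0.010 * fs)                            # 10 ms window -> 480 samples
    t = np.arange(n) / fs
    shot = np.exp(-t / 0.001) * np.cos(2 * np.pi * 2_000 * t)  # toy impulse, not real data
    recorded = np.clip(shot, -0.9, 0.9)            # pretend the ADC rails sit at +/-0.9
    idx = np.flatnonzero(np.abs(recorded) >= 0.9)
    for excess in (3, 6, 10):
        print(f"{excess} dB hidden above the rail -> {level_change_db(recorded, idx, excess):+.2f} dB")
    print(f"excess needed for a 1 dB change: {excess_needed_db(recorded, idx, 1.0):.1f} dB")
```

Because the clipped samples are the peak of the shot, they tend to carry a large share of the window's energy, so running this on the real trimmed window (rather than the toy one) is what would actually answer the question.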

Tomorrow I am going to do the test again. I am going to set my sampling software, "Parrot", to attenuate the signal by 10 dB and move the sampling location to ten yards downrange at about 1 o'clock (30 degrees clockwise). If that clips, I will set the attenuation to 20 dB and continue the process until I have eliminated clipping. I don't like throwing away data either, and adding attenuation "feels" like that to me.
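To decide whether a given run clipped (and whether to step from 10 dB to 20 dB of attenuation), one can count samples sitting at the ADC rails. A minimal sketch, assuming Parrot can export signed 16-bit PCM WAV files (its actual export format isn't stated in the thread); `count_clipped`, `clipped_in_wav` and the `margin` parameter are hypothetical helpers, and a multichannel file is counted across all interleaved channels.

```python
import wave
import numpy as np

def count_clipped(samples, margin=0, bits=16):
    """Count samples at (or within `margin` LSB of) either rail of a signed ADC."""
    x = np.asarray(samples, dtype=np.int64)
    top = 2 ** (bits - 1) - 1          # +32767 for 16-bit
    bottom = -(2 ** (bits - 1))        # -32768 for 16-bit
    return int(np.count_nonzero((x >= top - margin) | (x <= bottom + margin)))

def clipped_in_wav(path, margin=0):
    """Read a 16-bit PCM WAV with the standard-library wave module and count rail hits."""
    with wave.open(path, "rb") as w:
        if w.getsampwidth() != 2:
            raise ValueError("this sketch assumes 16-bit PCM")
        frames = w.readframes(w.getnframes())
    return count_clipped(np.frombuffer(frames, dtype="<i2"), margin=margin)
```

A nonzero count on, say, `clipped_in_wav("run_10dB.wav")` (hypothetical file name) would be the cue to try the next attenuation step.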

Will I lose more information by allowing those three clipped points, or by attenuating the entire sample by 20 dB?

I see your point, but do we actually know how high the point was originally? We don't. That information is lost forever. If you still clip with 10 dB and 20 dB of attenuation, then something is definitely weird. Too much attenuation is bad too; I'll explain why in a paragraph or two.

It's one of life's unfortunate trade-offs when sampling impulsive stuff: do we give up the top end or the bottom end? Impulsive signals are really hard because they require transducers with good dynamic range. My IMM-6 microphone has 70 dB of dynamic range, for what it is worth. Do you know what your microphone's dynamic range is? Maybe I will run into the same problem.
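The recording chain's dynamic range is capped by its weakest link, the microphone or the ADC. A small sketch comparing the two, assuming an ideal quantizer (the textbook 6.02·N + 1.76 dB figure for a full-scale sine; real recorders fall short of this). The recorder bit depth in this setup isn't stated, so the 16- and 24-bit cases below are only illustrations, and the function names are hypothetical.

```python
def ideal_adc_dynamic_range_db(bits):
    """Ideal quantizer SNR for a full-scale sine wave: 6.02*N + 1.76 dB."""
    return 6.02 * bits + 1.76

def usable_dynamic_range_db(mic_db, bits):
    """The chain can be no better than the weaker of the mic and the (ideal) ADC."""
    return min(mic_db, ideal_adc_dynamic_range_db(bits))

if __name__ == "__main__":
    for bits in (16, 24):
        print(f"{bits}-bit ideal ADC: {ideal_adc_dynamic_range_db(bits):.2f} dB -> "
              f"{usable_dynamic_range_db(70.0, bits):.1f} dB usable with a 70 dB mic")
```

With either bit depth the 70 dB mic is the limit, which is why the clip level (top end) and the background noise (bottom end) have to be traded against each other.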

But remember the old radar guy's trick. (That's me, if you couldn't figure it out.)

As long as the microphone can still detect the background noise (so you see bits twiddling during the inter-shot period) and does not clip, you can recover the information. The "signal" may look like junk in the time domain, but we can still get the spectra because of the process gain of the FFT. A 16K FFT has 10*log10(16,384) = 42 dB of process gain in every bin. So we could easily recover stuff that had a -20 dB SNR in the time domain, and it would show up with a +22 dB SNR in the frequency domain. If we need more process gain, we need to use a longer FFT.
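The process-gain argument can be checked numerically: bury a tone in white noise at a chosen time-domain SNR, take a 16K FFT, and compare the tone bin with the average noise bin. This is a sketch under simplifying assumptions (white Gaussian noise, the tone centered exactly on a bin, no window function); `fft_bin_snr_db` is a hypothetical helper. One caveat worth flagging: the 10·log10(N) figure is the complex (I/Q) case, as in radar. For real-valued microphone samples the per-bin gain works out to 10·log10(N/2), about 39 dB for 16K, so the `real=True` case should land a few dB lower.

```python
import numpy as np

def fft_bin_snr_db(n=16384, snr_time_db=-20.0, real=False, seed=0):
    """Measured per-bin SNR (dB) of a tone in white noise whose time-domain SNR
    is exactly `snr_time_db`: tone-bin power over the mean noise-only bin power."""
    rng = np.random.default_rng(seed)
    t = np.arange(n)
    k0 = n // 8                                   # tone sits exactly on bin k0 (no scalloping loss)
    snr_lin = 10 ** (snr_time_db / 10)
    sigma2 = 1.0                                  # noise power per sample
    if real:
        amp = np.sqrt(2 * sigma2 * snr_lin)       # real tone power is amp**2 / 2
        x = amp * np.cos(2 * np.pi * k0 * t / n) + rng.standard_normal(n) * np.sqrt(sigma2)
    else:
        amp = np.sqrt(sigma2 * snr_lin)           # complex tone power is amp**2
        noise = (rng.standard_normal(n) + 1j * rng.standard_normal(n)) * np.sqrt(sigma2 / 2)
        x = amp * np.exp(2j * np.pi * k0 * t / n) + noise
    power = np.abs(np.fft.fft(x)) ** 2
    noise_bins = np.ones(n, dtype=bool)
    noise_bins[k0] = False
    if real:
        noise_bins[n - k0] = False                # a real tone also lands in the mirror bin
    return float(10 * np.log10(power[k0] / power[noise_bins].mean()))

if __name__ == "__main__":
    for real in (False, True):
        snr_bin = fft_bin_snr_db(real=real)
        print(f"real={real}: -20 dB in time -> {snr_bin:+.1f} dB per bin "
              f"(gain {snr_bin + 20:.1f} dB)")
```

At -20 dB input the complex case should come out near +22 dB, give or take about a dB of scatter from the noise in the tone bin itself; doubling `n` should add roughly 3 dB, which is the "longer FFT" point.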

The idea is to back off from the source until we don't clip on the loudest object we wish to use as a reference, be it a bare shot or a reference LDC. It's not good to use an attenuator, because it is possible to attenuate the microphone noise below the least significant bit of the ADC.

But it is OK to use an attenuator to see how much attenuation is needed to avoid clipping. Then one should back away from the source by the distance corresponding to that attenuation and remove the attenuator. The sound intensity should drop off as 1/(r^2), or -6 dB for every doubling of the range. Radar drops off at -12 dB per doubling of the range, since the return is 1/(r^4). So if the mic is at 5 m, moving it to 10 m will result in 6 dB of attenuation relative to the level at 5 m. All we need to do is make sure we don't clip. Then we get to the next important thing.
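Converting the attenuation that stopped the clipping into a standoff distance is one line of inverse-square arithmetic. A sketch assuming a point source in free field; ground reflections, wind and atmospheric absorption (which grows at ultrasonic frequencies) will push real numbers around, so the result is a starting point to confirm with a no-clip check. The function names are hypothetical.

```python
import math

def range_for_attenuation(r1, atten_db):
    """Range giving `atten_db` less level than at r1 (free-field inverse-square law)."""
    return r1 * 10 ** (atten_db / 20)

def attenuation_between(r1, r2):
    """Level drop in dB going from range r1 to r2 (same units for both)."""
    return 20 * math.log10(r2 / r1)

if __name__ == "__main__":
    print(f"5 m -> 10 m: {attenuation_between(5, 10):.1f} dB")
    for atten in (10, 20):
        print(f"{atten} dB below the 5 m level: about {range_for_attenuation(5, atten):.1f} m")
```

Starting from 5 m, that works out to about 15.8 m for 10 dB and 50 m for 20 dB, which shows how quickly the range grows if a lot of attenuation turns out to be needed.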

We want the microphone noise (more specifically, the background noise the microphone hears) to be no less than 1.4 LSB RMS at the ADC. If that is achievable, full signal recovery is possible, to the extent of the FFT gain. Sorry to be so technical, but this works. I've used it in military and commercial applications, including automotive radars at 24 and 77 GHz. My company sold tens of millions of automotive radars a year, and my designs had production runs of several million.
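Checking the 1.4 LSB RMS criterion on a recording amounts to measuring the RMS of an inter-shot stretch in raw ADC counts. A minimal sketch, assuming integer PCM samples and that any DC offset should be removed first; `background_rms_lsb` and `noise_floor_ok` are hypothetical names. The demo also illustrates the earlier point about attenuators: the same toy background quantized with 20 dB of attenuation in front of the ADC should collapse to mostly zero codes, well under the threshold.

```python
import numpy as np

def background_rms_lsb(samples):
    """RMS of inter-shot background noise, in ADC LSBs (integer PCM counts)."""
    x = np.asarray(samples, dtype=float)
    x = x - x.mean()                     # remove any DC offset before measuring noise
    return float(np.sqrt(np.mean(x ** 2)))

def noise_floor_ok(samples, min_lsb=1.4):
    """True if the background noise is at least `min_lsb` LSB RMS."""
    return background_rms_lsb(samples) >= min_lsb

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    analog = rng.standard_normal(48_000) * 3.0       # toy background: 3 LSB RMS before quantizing
    for atten_db in (0, 20):
        codes = np.round(analog * 10 ** (-atten_db / 20)).astype(np.int16)
        verdict = "ok" if noise_floor_ok(codes) else "too quiet"
        print(f"{atten_db} dB: {background_rms_lsb(codes):.2f} LSB RMS, {verdict}, "
              f"{np.mean(codes == 0):.0%} of samples exactly zero")
```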

Systems developed to measure gunshot noise (for characterizing range hearing safety) use very high-end equipment. I looked into one and it was very expensive. We don't have to measure sounds quite that loud, but we face similarly tough constraints. They use special microphones and sophisticated signal processing. They also sample sound at a minimum of 200 kHz, per MIL standard, because ultrasonic sound can still be damaging even though we do not perceive it. Just getting a wideband mic calibrated from 20 Hz to 100 kHz is a trick. My mic is calibrated from 20 Hz to 20 kHz, and it falls off a cliff at 20 kHz. It costs a bunch of $$$ to get that full bandwidth. We are attempting to do the "poor man's" version, for the purpose of reducing neighborly attention or ire, or just trying to preserve what hearing we have left.

Just reiterating, this measurement isn't a trivial task, but I do think it is doable with some ingenuity and motivation.